When SQL Server AlwaysOn for HADR is not always suitable

Contrary to the popular perception of how smooth SQL Server AlwaysOn is for HADR scenarios, my experience with it has not been great when it comes to DR situations. AlwaysOn needs meticulous design, planning and DR procedures (TEST, TEST, and TEST) to ensure smooth failover and failback.

I embarked on designing and implementing a SQL Server 2012 HADR solution across two sites, based on the FCI+AG white paper. My implementation was a two-node SQL VM FCI at each site, with some additional complexities:

- Hyper-V virtualization

- Only Guest Failover Clustering

- SQL Disks presented directly to the guest

- Mount Points to circumvent limited drive letters

You might be asking me WHY, FOR HEAVEN’S SAKE? Yes, I asked that as well. It was a strict customer requirement to virtualize everything. Virtualizing also helped reduce SQL licensing cost (by core count). FCI requires guest clustering, hence the disks need to be attached directly to the guest from a shared SAN with multipath I/O. Redundancy was built in at each physical and logical layer sufficiently (or rather, excessively).

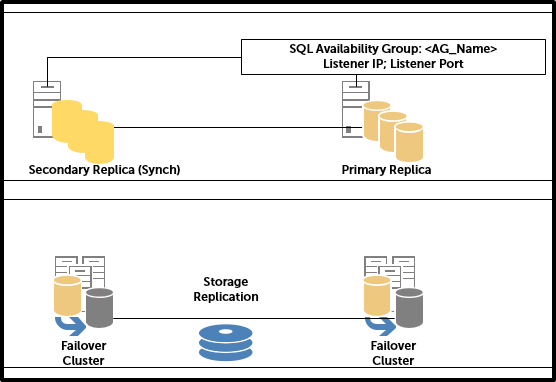

Figure 1: High Level SQL Solution

Note: Storage replication was used strictly to transport backups between sites.

Although it was complex, I successfully implemented it and tested failover and failback within the stipulated RPO/RTO. But soon after, we started experiencing intermittent disruptions at host level, resulting in unexpected power off/on of SQL nodes. This left some databases on the DR side in RECOVERY PENDING status, the resolution for which is to remove the database from the AG or recreate the AG. The important fact is that the server instance hosting the secondary replica will be unavailable in such a case. Your upstream applications are using the AG listener, and any change, such as reverting to the instance name, means further rework, testing and downtime.

Lessons learnt:

- You may want a second local server instance to host a secondary replica. This should help you restore and recreate AG databases without having to rely entirely on the second site. But beware of the licensing implications and the cost of doubling compute, storage and network resources at each site

- Alternatively, forgo FCI completely

- Look at further abstraction from the AG, such as a SQL alias for upstream applications

- Have a strong SQL DBA monitor and oversee the SQL environment and SQL DR procedures, instead of your platform guys

The question, though, is whether it really pays to have AlwaysOn HADR. Using storage replication technologies to sync backups and transaction logs, followed by a manual DR restore, may be smoother in some cases. What are your thoughts?

A portable generic maintenance plan

I have lately been involved in a large-scale virtualisation, consolidation and migration project, and have been automating as much as possible for SQL installs. Given the sheer number of SQL servers, a high degree of automation is desirable for the SQL infrastructure.

I intended to use the CodePlex SQL Server FineBuild by Ed Vassie as a common framework and guidance for installation and configuration of SQL servers. However, for various reasons I was unable to use it. I highly recommend it for guidance even if you are not interested in scripted unattended installs.

FineBuild provides SQL jobs for routine maintenance activities. Monitoring and maintaining multiple jobs on a few hundred servers could be challenging. Instead, I chose a single Maintenance Plan approach to carry out maintenance tasks.

Issue

A generic maintenance plan for any new SQL server: I found there is not much guidance on how to make an SSIS package generic with regard to connection and execution context. Also, when an SSIS package is exported, the associated jobs and any referential integrity constraints are not exported.

Solution

Instead of discussing all the issues I ran into, I will simply take you through a few steps to create and port your SSIS package.

You will need two instances of SQL Server to test this.

Source Server – A default or named instance of SQL Server. It will also need SSIS installed, with BIDS.

Example – SERVERA

Target Server – A default or named instance of SQL Server. SSIS is optional.

Example – SERVERB\Inst1

- Create a single maintenance plan with one encompassing (single) schedule by going to SSMS > Connect Server > Management > Maintenance Plans > Maintenance Plan Wizard. Please refer to the MSDN articles on maintenance plan creation. Note – My plan was called GenericMaint

- Now connect to SSIS from SSMS > Object Explorer > Integration Services. Expand Stored Packages > MSDB > Right click on GenericMaint > Export Package…

- On the Export Package dialog, choose Package location as File System and Package path as <your preferred location>. This is in order to view, edit and export the package to other servers

-

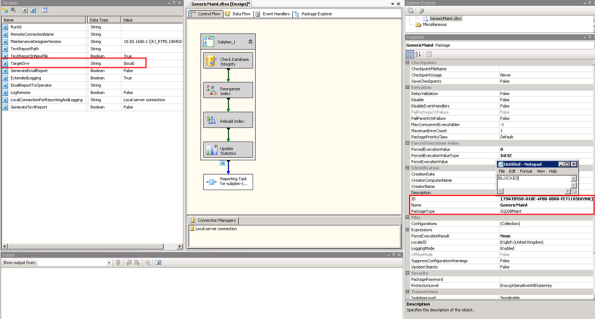

Using BIDS open the SSIS pacakage and add a new User variable called TargetSrvr. Also copy the Package ID higlighted on the screen below for later use.

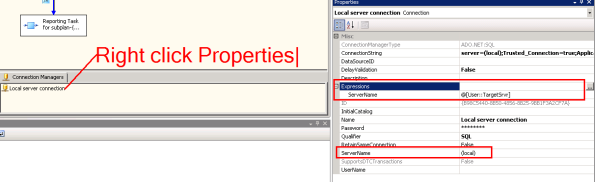

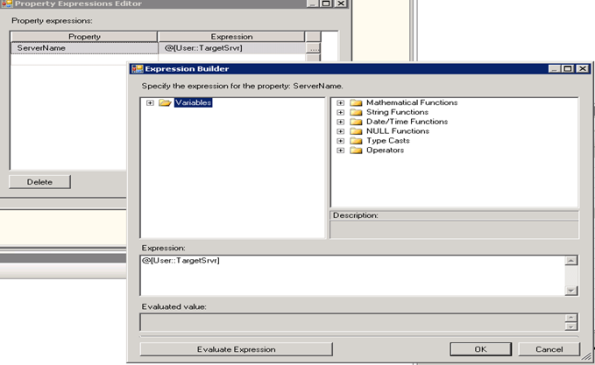

- Parametrise the Server context by opening the Connection Manager > Properties > Expand Expressions by clicking on the ellipsis > Choose ServerName from Property dropdown > Expression >

- Apply changes and save the package

- Make a note of the SubplanId by highlighting Subplan_1 and reading the ID from the Properties window, as highlighted below.

- Export the GenericMaint.dtsx plan to the destination servers. Note – SSIS packages can be exported and imported only if the server has Integration Services installed on it. Your account will need the requisite permissions on the server and SQL instance to perform an export/import.

- Export/Import using DTUtil. Open SSMS > New Query window connected to <<Your Target Server>>

- Change to SQLCMD mode: SSMS menu > Query > SQLCMD Mode. Copy the code below into the query window

    -- SCRIPT to export SSIS package from 127.0.0.1 server to a target server
    -- Author: Ajith@Dell 08/06/2011
    -- /FILE "\\127.0.0.1\it\DTS Packages\GenericMaintPlan.dtsx" - Location of SSIS package
    -- /DestServer <<SQL Instance Name>> - SQL instance name
    -- /COPY SQL;"Maintenance Plans/GenericMaintPlan" - Target location for package to be copied
    !!dtutil /Q /FILE "\\127.0.0.1\<<Your Package location>>\DTS Packages\GenericMaintPlan.dtsx" /DestServer <<SQL Instance Name>> /COPY SQL;"Maintenance Plans/GenericMaintPlan"

DTUtil is reusable and beneficial in large-scale deployments. Alternatively, copy the package to your target server via SSMS > Connect to Integration Services > choose the source server > expand Stored Packages > Maintenance Plans > right click > Export Package…

Export the package to the target SQL Server – Package Location: SQL Server; Server: <<Target Server>>; Package Path: Maintenance Plans

Click OK to complete the save and export
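For a large estate, the dtutil call shown earlier can be generated per target instead of being edited by hand. The following is a minimal Python sketch and not part of the original scripts: the function name, the `\\fileserver\packages` share and the target list are hypothetical, and it only uses the `/Q`, `/FILE`, `/DestServer` and `/COPY` options that already appear in the command above.

```python
def build_dtutil_command(package_file, dest_server, plan_name="GenericMaintPlan"):
    """Build the dtutil command line that copies a .dtsx file into msdb on one instance."""
    return (
        f'dtutil /Q /FILE "{package_file}" '
        f"/DestServer {dest_server} "
        f'/COPY SQL;"Maintenance Plans/{plan_name}"'
    )


if __name__ == "__main__":
    # Hypothetical share and instance names; replace with your own.
    package = r"\\fileserver\packages\GenericMaintPlan.dtsx"
    targets = ["SERVERB\\Inst1", "SERVERC"]
    for target in targets:
        # Print one command per target; paste into a batch file or run via subprocess.
        print(build_dtutil_command(package, target))
```

Printing the commands rather than running them keeps the sketch safe to try; each line can be reviewed before it touches a server.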

- Now you will need to create a job to execute the maintenance plan SSIS package.

Important – Exporting a maintenance plan does not export the associated jobs. Run the following script on each target server to create the job. The highlighted sections of the script should be reviewed and changed if required; some of them are self-explanatory where critical changes were made.

    USE [msdb]
    SET ANSI_NULLS ON
    GO
    SET QUOTED_IDENTIFIER ON
    GO
    -- SCRIPT to create a job to execute generic SSIS package
    -- Author: Ajith@Dell 08/06/2011
    --#region DropSPROC-DropJob
    IF EXISTS (SELECT * FROM sys.objects
               WHERE object_id = OBJECT_ID(N'[dbo].[DropJob]')
                 AND type IN (N'P', N'PC'))
    BEGIN
        DROP PROCEDURE [dbo].[DropJob]
    END
    --#endregion
    GO

    --#region CreateSPROC-DropJob
    USE [msdb]
    SET ANSI_NULLS ON
    GO
    SET QUOTED_IDENTIFIER ON
    GO
    CREATE PROC [dbo].[DropJob]
        @JobName AS VARCHAR(200) = NULL
    AS
    DECLARE @msg AS VARCHAR(500);
    -- Author Clay McDonald - http://claysql.blogspot.com/2009/07/cant-delete-job-microsoft-sql-server.html
    IF @JobName IS NULL
    BEGIN
        SET @msg = N'A job name must be supplied for parameter @JobName.';
        RAISERROR(@msg, 16, 1);
        RETURN;
    END
    IF EXISTS (
        SELECT subplan_id FROM msdb.dbo.sysmaintplan_log WHERE subplan_id IN (
            SELECT subplan_id FROM msdb.dbo.sysmaintplan_subplans WHERE job_id IN
                (SELECT job_id FROM msdb.dbo.sysjobs_view WHERE name = @JobName)))
    BEGIN
        DELETE FROM msdb.dbo.sysmaintplan_log WHERE subplan_id IN (
            SELECT subplan_id FROM msdb.dbo.sysmaintplan_subplans WHERE job_id IN
                (SELECT job_id FROM msdb.dbo.sysjobs_view WHERE name = @JobName));
        DELETE FROM msdb.dbo.sysmaintplan_subplans WHERE job_id IN
            (SELECT job_id FROM msdb.dbo.sysjobs_view WHERE name = @JobName);
        EXEC msdb.dbo.sp_delete_job @job_name = @JobName, @delete_unused_schedule = 1;
    END
    ELSE IF EXISTS (
        SELECT subplan_id FROM msdb.dbo.sysmaintplan_subplans WHERE job_id IN
            (SELECT job_id FROM msdb.dbo.sysjobs_view WHERE name = @JobName))
    BEGIN
        DELETE FROM msdb.dbo.sysmaintplan_subplans WHERE job_id IN
            (SELECT job_id FROM msdb.dbo.sysjobs_view WHERE name = @JobName);
        EXEC msdb.dbo.sp_delete_job @job_name = @JobName, @delete_unused_schedule = 1;
    END
    ELSE
    BEGIN
        EXEC msdb.dbo.sp_delete_job @job_name = @JobName, @delete_unused_schedule = 1;
    END
    GO
    --#endregion

    --#region Scripted Job for GenericMaintPlan
    BEGIN TRAN
    DECLARE @myjobname NVARCHAR(256), @planname NVARCHAR(256)
    SET @planname = N'GenericMaintPlan'
    SET @myjobname = N'DBA-GenericMaintPlan.Subplan_1'
    DECLARE @retjobId BINARY(16)
    DECLARE @ReturnCode INT, @schedule_id_Out INT
    SELECT @ReturnCode = 0
    DECLARE @jobscheduleid BINARY(16)
    SELECT @jobscheduleid = NEWID()
    DECLARE @theplanid UNIQUEIDENTIFIER, @thesubplanid UNIQUEIDENTIFIER, @subplanname NVARCHAR(56)
    DECLARE @cmdtxt NVARCHAR(MAX)
    -- Should be brought over from SSIS Package, see package properties
    SET @theplanid = 'F99D7F9E-848A-464C-86C7-80642561CFFC'
    -- Should be brought over from SSIS Package's subplanid, see package properties
    SELECT @thesubplanid = '8C8459C8-D38E-4E4F-BB9A-4E4E45C4A69D'
    SELECT @subplanname = N'Subplan_1'
    SET @cmdtxt = N'/SQL "Maintenance Plans\' + @planname + '" /SERVER "$(ESCAPE_NONE(SRVR))" /CHECKPOINTING OFF /SET "\Package\Subplan_1.Disable";false /SET "\Package.Variables[User::TargetSrvr]";"$(ESCAPE_NONE(SRVR))" '

    -- Drop any existing job of the same name before recreating it
    IF EXISTS (SELECT job_id FROM msdb.dbo.sysjobs_view WHERE name = @myjobname)
    BEGIN
        EXEC msdb.dbo.DropJob @myjobname
        IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback
    END

    EXEC @ReturnCode = msdb.dbo.sp_add_job
        @job_name = @myjobname,
        @category_id = 3, @job_id = @retjobId OUTPUT
    IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

    EXEC @ReturnCode = msdb.dbo.sp_add_jobstep
        @job_id = @retjobId, @step_name = N'Subplan_1',
        @step_id = 1, @subsystem = N'SSIS',
        @command = @cmdtxt
    IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

    EXEC @ReturnCode = msdb.dbo.sp_update_job
        @job_id = @retjobId,
        @enabled = 1,
        @start_step_id = 1,
        @category_name = N'Database Maintenance',
        @owner_login_name = N'<<Domain>>\<<User Name>>' -- Update as required
    IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

    EXEC @ReturnCode = msdb.dbo.sp_add_jobserver
        @job_id = @retjobId

    IF EXISTS (SELECT 1 WHERE @@VERSION LIKE '%SQL Server 2008%')
    BEGIN
        EXEC @ReturnCode = msdb.dbo.sp_add_jobschedule
            @job_id = @retjobId, @name = @myjobname,
            @enabled = 1,
            @freq_type = 8,
            @freq_interval = 1,
            @freq_subday_type = 1,
            @freq_subday_interval = 0,
            @freq_relative_interval = 0,
            @freq_recurrence_factor = 1,
            @active_start_date = 20110610,
            @active_end_date = 99991231,
            @active_start_time = 0,
            @active_end_time = 235959,
            @schedule_uid = @jobscheduleid,
            @schedule_id = @schedule_id_Out OUTPUT
        IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback
    END
    ELSE
    BEGIN
        PRINT 'Non 2008 version detected'
        EXEC @ReturnCode = msdb.dbo.sp_add_jobschedule
            @job_id = @retjobId, @name = @myjobname,
            @enabled = 1,
            @freq_type = 8,
            @freq_interval = 1,
            @freq_subday_type = 1,
            @freq_subday_interval = 0,
            @freq_relative_interval = 0,
            @freq_recurrence_factor = 1,
            @active_start_date = 20110610,
            @active_end_date = 99991231,
            @active_start_time = 0,
            @active_end_time = 235959,
            --@schedule_uid=@jobscheduleid, -- @schedule_uid not supported for 2005
            @schedule_id = @schedule_id_Out OUTPUT
        IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback
    END

    EXEC @ReturnCode = msdb.dbo.sp_attach_schedule
        @job_id = @retjobId, @schedule_id = @schedule_id_Out
    IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

    -- The following step was added to overcome job execution failure.
    EXEC @ReturnCode = msdb.dbo.sp_maintplan_update_subplan
        @subplan_id = @thesubplanid,
        @plan_id = @theplanid,
        @name = @subplanname,
        @description = 'Created by CREATE_JOB_DBA-GenericMaintPlan…SQL',
        @job_id = @retjobId,
        @schedule_id = @schedule_id_Out,
        @allow_create = 1 -- Allow create = 1 enables creating of subplans where SSIS packages are imported
    IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

    COMMIT TRANSACTION
    GOTO EndSave
    QuitWithRollback:
        PRINT 'error occurred'
        IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION
    EndSave:
    --#endregion

- Verify by executing the job
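The least obvious part of the job script is the SSIS step command, which combines the package path, the SQL Agent `$(ESCAPE_NONE(SRVR))` token and two `/SET` overrides. The Python sketch below only reassembles that same string from its parts so the pieces are easier to see; the function and parameter names are hypothetical, and the option text is copied from the script rather than checked against dtexec.

```python
# SQL Agent replaces this token with the server name when the job step runs.
AGENT_SERVER_TOKEN = "$(ESCAPE_NONE(SRVR))"


def build_job_step_command(plan_name, subplan_name="Subplan_1", variable="TargetSrvr"):
    """Assemble the SSIS job step command used for @command in sp_add_jobstep."""
    return (
        f'/SQL "Maintenance Plans\\{plan_name}" '                # package stored in msdb
        f'/SERVER "{AGENT_SERVER_TOKEN}" '                       # load it from the local instance
        "/CHECKPOINTING OFF "
        f'/SET "\\Package\\{subplan_name}.Disable";false '       # enable the subplan
        f'/SET "\\Package.Variables[User::{variable}]";"{AGENT_SERVER_TOKEN}"'  # point the plan at this server
    )


if __name__ == "__main__":
    print(build_job_step_command("GenericMaintPlan"))
```

Because the server name comes from the Agent token at run time, the same command text works unchanged on every target instance, which is what makes the plan portable.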

Disclaimer – I have done very limited testing on the scripts. I have found that they work satisfactorily on SQL Server 2005, 2008 and 2008 R2. Use them at your own risk. Feel free to make any amendments as required.

If you have any improvements or suggestions please feel free to contact me or comment here….

Agile Projects like – “A Christmas Story”

I have taken a few days off work for Christmas and just watched “A Christmas Story” with my little one. It is not the first time I have watched this movie. What struck me this time was an analogy I could make: a decent family run by an able mother (Scrum Master) who sees through a merry and happy Christmas (successful project delivery) in the face of all the madness around her. Ralphie asks for a BB gun (developers insisting on shiny new IDEs and upgrades); Randy, his brother, just won’t eat (operations not ready to deploy every time); and the Old Man (Product Owner) insists on a ridiculous leggy lamp in the window. With sheer shrewdness she manages to destroy the lamp, ensures Randy eats at the table, and puts her foot down when Ralphie blurts out the F word. Yet she allows herself to be manipulated when the Old Man decides to get Ralphie the BB gun.

Organizations should not simply allow individuals who call themselves Scrum Masters to steer projects, but should ensure they are good mothers who look after the family. Although this is true of any methodology, I think with Agile practices it’s paramount, as the team gathers around her for breakfast (Daily Scrum). Families who do not sit down together for breakfast or dinner sometimes still seem to function normally. But on closer inspection you will notice many shortcomings in behaviours, virtues and personas.

From my own observations, many teams practising agile are young and adept at adapting to change quickly. Most despise the longer route of fully understanding the scope before starting the work. In other words, these are generations that have grown up with an “instant gratification” mentality and believe that talk is cheap: show me the goods. A good Scrum Master will make all the difference between success and failure for such a team, even though he/she is not the leader.

Why we jumped on ALM/TFS 2010 bandwagon

Back in May 2010, I sat down with my colleagues and the CTO of the company to discuss enterprise strategy, in particular for the teams using the MS stack at this company. The product development teams were using .NET 2.0/1.1 and SQL Server 2005, consuming and exposing gateway web services. Applications ranged from Web Apps, Windows Services, Win Forms, Web Services and more. Methodologies, tooling and best practices varied between teams. TFS 2008 was used solely by development teams for work item tracking and source control. Build automation, continuous integration, SDLC quality tracking and reporting were absent and sorely missed. Business analysts, testers, project managers and architects all worked in isolation with their favourite tools. However, all of them collaborated using a common SharePoint site.

The Java teams were at the forefront when it came to tracking and monitoring software quality. Not that it couldn’t be done with the existing setup for MS stack, but with the new kid on the block VS 2010/TFS 2010 we decided to take a fresh look at it for our needs.

Where software was prescribed by so-called “Enterprise Teams”, it soon became outdated and turned into a roadblock to the natural evolution of applications and of teams subscribing to enterprise services. Enterprise teams often lack budgets and suffer from gross neglect by business and project managers.

Our leaders believed that enabling smaller, focussed teams and giving them space to innovate and deliver, without losing control, visibility and transparency, was the way forward. This would empower lower ranks to make decisions freely with regard to tools, 3rd party software, open source etc. We all know how hard it usually is to start off with nothing. Teams and individuals easily lose sight and objectivity when they set off on a creative path. The only way we could solve this problem was to set up a prescriptive guidance and reference architecture for new teams and greenfield projects to cross-reference. That said, it was only there if teams wanted to use it.

We came out of the meeting with a roadmap to embark on a technology refresh for the MS teams, starting with Team Foundation Server 2010 and the Visual Studio 2010 suite. This was an opportunity to set up best-practice infrastructure, platform, tooling, frameworks and, more importantly, processes for teams.

What we liked about TFS 2010 and ALM back then

- Team Project Collection and Team Projects for Business and Departmental alignment

- Scalability, n-tier deployment, proxy caching for distributed teams

- Team Web Access for Work Item Tracking, which is quite powerful (not all team members will need Team Explorer like in the past)

- Project Portal (The fact that it can be organized to depict your company org chart, create cross functional teams etc.)

- TFS 2010 ALM features such as Traceability of requirements for reporting

- Out of the box Reports and Visualisation

- Build Automation – Continuous Integration, Gated Check In, Work Item integration

- Build Extensibility (Example: Build Number for Assembly versioning, PEX etc.)

- Build Verification (MS Tests, Code Coverage, Architecture Validation etc.)

- Test Management

- Process Template Customization for your needs

- Branching Visualizations

- TFS 2010 Ease of Administration

- Possibly win over Java guys with Team Explorer Everywhere integration with Eclipse IDE

and more…

In my next blog post I will discuss how I went about setting up TFS 2010.

MSF AGILE v5 Sprint/Iteration Start and End Dates

Isn’t it convenient to work without any Start and End Dates for your Iterations/Sprints? A sliding 2 week window. Well, the PMs don’t like it. Anyway, in my org it’s a parody: whilst we developers and architects are developing on cutting-edge .NET 4.0 and VS 2010, churning out ASP.NET MVC 2 apps (I know the Grails and Ruby community are sniggering), the PMs and the rest of the org carry on with the outdated Office 2003 (Project, Excel) (un)productivity suite.

TFS 2010 allows work item integration with Excel and MS Project. However, the catch is that you need Office 2010 and MOSS 2010 to get the seamless integration that WOWs the CIOs & CTOs. As far as I know, work item scheduling fields such as the Start and End Dates for an iteration can only be updated using MS Project. I believe you can use the Scrum template instead of MSF Agile to get a richer agile practice in your org.

For those of us who chose MSF AGILE v5, I found a free tool to manage AGILE projects. Telerik has a free download for TFS Work Item Manager & TFS Project Dashboard. Once you have installed it and played around with it –

- Launch the Work Item Manager > View > Iteration Schedule to see all the iterations organized by Area and Iterations.

- Set your Start and End dates

- Set the predecessor, similar to what you do in MS Project

Now your reports and charts should be more meaningful. I simply like both TFS Work Item Manager & TFS Project Dashboard. I don’t like my managers sticking Post-it notes on my screen every time I walk out to the loo. I am sure there are tools from other vendors which might be capable of this, but this is the one my colleague pointed me at and I held onto.

Since then I have also added Scheduling and Planning fields to the Bug work item; see TFS 2010: Adding Scheduling/Planning Hours to MSF Agile Bug Work Item Template. GO PMs, knock yourselves out…

TFS 2010 – Customize Build Output changes and MS Tests

Recently I have been trying to structure the TFS build output to ease deployment of artefacts. The solution contains Web Apps, Windows Services and DB scripts. As some of you might already be aware, the TFS server build output does not reflect your solution and project structure.

First, Jim Lamb’s solution

So there are a few HACKS to edit your project files and your BUILD process templates. Once you have implemented those HACKS, you should be able to output the binaries as intended. Jim Lamb’s CustomizableOutDir post covers how to edit your project files to output as intended.

BEWARE if you are using the DefaultTemplate and Workflow: ensure you are editing the right sequence.

Edit the Workflow by navigating to Sequence > Run on Agent > Try Compile & Test…. > Run MS BUILD for Project. Edit the properties as shown in the screen below.

Note that the OutDir needs to be cleared

Further changes to the project files are needed to ensure the output paths are as desired. Open the .csproj in your solution using a text editor. Scroll to the PropertyGroup corresponding to the configuration and platform, and change the OutputPath as desired.

    <PropertyGroup Condition=" '$(Configuration)|$(Platform)' == 'Release|x86' ">
      <PlatformTarget>x86</PlatformTarget>
      <DebugType>pdbonly</DebugType>
      <Optimize>true</Optimize>
      <OutputPath Condition=" '$(TeamBuildOutDir)'!='' ">$(TeamBuildOutDir)\Win\Console\$(MSBuildProjectName)</OutputPath>
      <DefineConstants>TRACE</DefineConstants>
      <ErrorReport>prompt</ErrorReport>
      <WarningLevel>4</WarningLevel>
    </PropertyGroup>

FIX for Web projects and _PublishedWebSite

The next problem you will probably run into is the web project output. Web projects need to be edited slightly differently from the rest of the projects for MSBuild to output into the _PublishedWebSite folder. Edit your .csproj to make the changes as follows –

    <PropertyGroup Condition=" '$(Configuration)|$(Platform)' == 'Release|x86' ">
      <PlatformTarget>x86</PlatformTarget>
      <DebugType>pdbonly</DebugType>
      <Optimize>true</Optimize>
      <OutDir Condition=" '$(TeamBuildOutDir)'!='' ">$(TeamBuildOutDir)\Win\UI\$(MSBuildProjectName)</OutDir>
      <OutputPath>bin\</OutputPath>
      <DefineConstants>TRACE</DefineConstants>
      <ErrorReport>prompt</ErrorReport>
      <WarningLevel>4</WarningLevel>
    </PropertyGroup>

MSTest and No Test Results

Now you will notice that MS Tests are no longer running on TFS builds. Another HACK is to edit all MSTest project outputs using a macro; thanks to Christian Lavallee on StackOverflow for the suggestion.

Create a new macro in VS 2010 (shortcut Alt + F8). Add a reference to Microsoft.Build and import it in your macro. I customised the macro to work only on test projects, as all other deployable projects needed to be hand edited for a distinct OutputPath.

I edited the macro to output all test projects into a Tests folder as follows

    Dim outputTeamBuild = propertyGroup.AddProperty("OutputPath", "$(TeamBuildOutDir)\Tests\$(MSBuildProjectName)")
    outputTeamBuild.Condition = "'$(TeamBuildOutDir)'!='' "

After completing your changes to the macro, save it. Now open your solution with all the projects, switch to the macro and run it. Running the macro checks out your .csproj project files and changes the OutputPath for each qualifying project.
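If you would rather not depend on the Visual Studio macro editor, the same project-file change can be scripted outside the IDE. The Python sketch below is an alternative under stated assumptions, not the macro from StackOverflow: the function name is hypothetical, it works on the XML text of a .csproj that uses the standard MSBuild 2003 namespace, and it does not check the file out of source control for you.

```python
import xml.etree.ElementTree as ET

MSBUILD_NS = "http://schemas.microsoft.com/developer/msbuild/2003"
CONDITION = "'$(TeamBuildOutDir)'!=''"
TEST_OUTPUT = r"$(TeamBuildOutDir)\Tests\$(MSBuildProjectName)"


def add_team_build_output_path(csproj_xml):
    """Append a conditional OutputPath to every PropertyGroup that already sets OutputPath."""
    ET.register_namespace("", MSBUILD_NS)  # keep the default namespace unprefixed on output
    root = ET.fromstring(csproj_xml)
    tag = f"{{{MSBUILD_NS}}}OutputPath"
    for group in root.findall(f"{{{MSBUILD_NS}}}PropertyGroup"):
        paths = group.findall(tag)
        if not paths:
            continue  # not a configuration group
        if any(p.get("Condition") == CONDITION for p in paths):
            continue  # already patched, so running twice is harmless
        # Appended last, so it overrides the local OutputPath only on the build server.
        extra = ET.SubElement(group, tag, {"Condition": CONDITION})
        extra.text = TEST_OUTPUT
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    sample = (
        f'<Project xmlns="{MSBUILD_NS}">'
        "<PropertyGroup Condition=\" '$(Configuration)|$(Platform)' == 'Release|x86' \">"
        "<OutputPath>bin\\Release\\</OutputPath>"
        "</PropertyGroup>"
        "</Project>"
    )
    print(add_team_build_output_path(sample))
```

The conditional element is appended after the existing OutputPath because MSBuild evaluates properties in order, so local builds keep their usual bin folder while server builds pick up the Tests folder.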

Now CHECKIN your solution and trigger a BUILD on the server to verify the Output

EDIT: I am now considering rolling out custom project templates (Web, MS Test, Win services) for our org with the $(TeamBuildOutDir) changes, so that no macros will need to be run on the solution. I will cover how I get along in a new post.

PROBLEM API restriction: The assembly <<SomeUnitTest.dll>> has already been loaded from a different location

One more gotcha… and I am done. Interestingly, after all this hard work I was pulling my hair out as I ran into API restriction: The assembly <<SomeUnitTest.dll>> has already been loaded from a different location. Fortunately, I quickly realised that one of our unit test assemblies (a helper within it) was referenced by another unit test project, and was therefore also output into that project’s bin directory.

TIP: Do ensure the names of your non-test projects do not contain the word TEST.

One of my projects referenced by an MS Test project was named *TestHelper*. TFS builds will attempt to run MS Tests from assemblies named *Test*.dll and try to load them from all referenced projects.

I refactored the code to move all helpers out and renamed TestHelper, and then removed the reference to SomeUnitTest.dll from the consuming project. Built it again… Voilà: output as intended and cleanly structured for deployment, and MS Tests run fine.
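To see why a helper assembly gets swept up, the *Test*.dll wildcard can be tried against a few file names. This is a small Python sketch using the standard `fnmatch` module; the assembly names are made up, and the case-insensitive comparison is an assumption based on how Windows file matching normally behaves.

```python
from fnmatch import fnmatchcase


def looks_like_test_assembly(filename, pattern="*Test*.dll"):
    """Return True when the file name matches the test assembly wildcard, ignoring case."""
    return fnmatchcase(filename.lower(), pattern.lower())


if __name__ == "__main__":
    # Hypothetical assembly names for illustration only.
    for name in ["Orders.UnitTests.dll", "TestHelper.dll", "Contest.Entry.dll", "Orders.Core.dll"]:
        print(name, looks_like_test_assembly(name))
```

Note that the match is purely textual, so any name containing the letters "test" qualifies, including ones where it is part of another word.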

Well, nothing is simple, right? Who would have thought I would need Architecture Validation for unit tests as well…

OK DevOps, it’s all yours now…